Mind Meets Machine: Constructivism, Emergent Intelligence, and the Rise of LLMs [Part 1 of 3]

A Trilogy to Uncover How Human Learning Shapes AI – and Vice Versa

This trilogy is designed to guide educators and curious minds through the journey of how machines learn, in ways that parallel human learning processes. In Part 1, we set the foundation by exploring fundamental learning theories—behaviorism and cognitivism—and how they relate to early machine learning models. Part 2 dives deeper into constructivism and emergent intelligence, showcasing the evolution of machine learning and the rise of large language models. Finally, Part 3 takes us beyond mimicry, examining self-correction, multiple intelligences, and the future of machine learning. Follow along to see how each part builds upon the last, shedding light on how AI strives to replicate, and perhaps enhance, human cognition.

For whom this is for?

In an era where artificial intelligence is becoming an integral part of our classrooms and daily lives, understanding how machines learn is no longer optional—it's crucial. The rapid rise of AI demands that educators make sense of it not just as a technology, but as a learner. How can we, as educators, harness this tool if we do not understand its processes? By drawing parallels between human learning theories and machine learning, we bridge the gap between abstract computation and tangible educational experiences. This connection holds the potential to transform not only how we use AI to enhance learning and teaching, but also how we perceive learning itself.

(Here is the illustration combining constructivism as a learning theory and machine learning models. Generated by ChatGPT)

If you find these ideas intriguing, consider subscribing and sharing with others who might also be captivated by the convergence of education and technology.

Part 2: Building Minds - Constructivism, Emergent Intelligence, and the Rise of LLMs

Evolution of ML and Its Biological Parallels

What differentiates humans from machines? What distinguishes different levels of machine intelligence? And why is the development of large language models (LLMs) considered a paradigm shift?

Emergent abilities in large language models refer to complex skills or behaviors that are not directly programmed but arise from the model's extensive training on diverse datasets. These abilities manifest in tasks like writing poetry, answering nuanced questions, or providing logical explanations—tasks that require more than just basic pattern recognition or classification. Unlike conventional machine learning (ML) or deep learning (DL), which often focus on recognizing patterns and making predictions based on explicit training, LLMs demonstrate an ability to apply learned concepts in novel situations, suggesting a level of adaptability and generalization that goes beyond traditional approaches. This emergent behavior marks a significant departure from earlier models that merely classified data or mimicked observed patterns, representing a true paradigm shift in artificial intelligence capabilities.

To explore these questions, consider the analogy of a two-year-old learning their first words compared to a nine-year-old solving mathematical problems. A two-year-old is primarily absorbing information through observation and imitation—learning to recognize sounds, words, and simple associations. For instance, when a parent points to a cat and says, "cat," the child begins to connect the word to the furry animal. This is similar to early phases of machine learning, where models are trained to recognize and reproduce patterns based on explicit training data (as we have discussed it in Part 1). These initial models, like the classic image recognition algorithms, learned to identify objects by being fed thousands of labeled images. This kind of learning aligns with behaviorism, which focuses on observable behaviors shaped by reinforcement and repetition. But is this intelligence? When do we say that a child is truly intelligent? Is it merely the ability to recognize and repeat, or is it the capacity to solve new problems and adapt? Behaviorism may explain the early stages, but as learning progresses, cognitivism becomes relevant, emphasizing how information is processed, stored, and applied.

However, as a child grows older, they move beyond simple mimicry. By the age of nine, a child is able to solve simple mathematical problems, such as calculating the area of a rectangle or understanding fractions. This ability demonstrates that the child is not just repeating what they were taught but can apply learned concepts in new contexts. This involves reasoning, critical thinking, and constructing new knowledge from their experiences—key characteristics of constructivism. Constructivism emphasizes that learning is an active, contextualized process where learners construct new ideas based on their current and past knowledge. A key concept within constructivism is Vygotsky's Zone of Proximal Development (ZPD), which refers to the range of tasks that a learner can perform with the guidance of a more knowledgeable individual but cannot yet perform independently. The role of teachers in constructivism is crucial—they act as facilitators or guides who help learners navigate through their ZPD, providing the necessary support to bridge gaps in understanding. Unlike behaviorism, which deals with conditioning, or cognitivism, which emphasizes the processing of information, constructivism is about building new understanding through active engagement and problem-solving. For example, in a real-life classroom setting, a teacher might encourage students to work on a group project where they have to research, discuss, and present their findings on a topic. This process allows students to construct their own understanding through collaboration and interaction, making learning more meaningful and personalized.

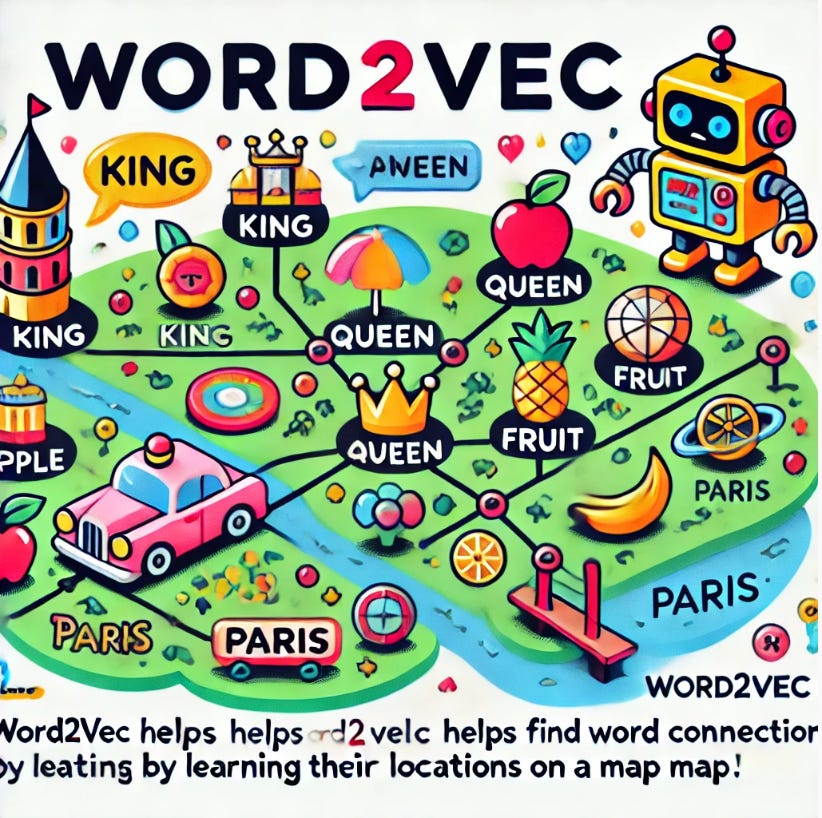

(Here's the illustration explaining Word2Vec in a simple and colorful way for a 12-year-old. Generated by ChatGPT)

In computer science, the concept of constructivism can be illustrated through Word2Vec. Word2Vec is a model that represents words in a continuous vector space based on their context in large datasets. Instead of simply categorizing words, Word2Vec learns the relationships between words through their usage, effectively constructing a nuanced understanding of language. For example, the word vectors for 'king' and 'queen' end up being related in a way that reflects their real-world associations, demonstrating how the model constructs meaning based on context, akin to human learning through experience. Teachers can use the association method to teach new vocabulary in a foreign language by connecting new words with familiar concepts. For instance, when teaching the word 'gato' (Spanish for 'cat'), a teacher might show a picture of a cat, use gestures, and relate it to the students' existing knowledge of cats in their native language. This helps students form mental associations and construct meaning based on both visual cues and prior knowledge.

However, the essence of constructivism is that learning, or knowledge, is subjective. The same word—such as "happy," "red," "thinking," or "Plato's philosophy"—can mean different things to different people, as the perception and meaning are constructed by individuals in conjunction with their surroundings. This subjectivity poses a new challenge for computer scientists: now that machines generate meaning, can it be subjective? What does it mean for machines to "make sense" of the world? Do they actually possess complex reasoning abilities like those attributed to advanced language models such as ChatGPT, Claude, and other modern buzzwords? If these models do exhibit some form of understanding, how do they develop it, and what processes enable humans to train machines to acquire this "understanding"?

Note: Behaviorism, cognitivism, and constructivism were proposed at different times and in a sequential manner. However, this does not imply that human cognitive development or human learning experiences follow a strict sequential order or are hierarchically distinct from each other. In practice, these theories can occur in parallel or simultaneously, with different aspects of learning drawing from behaviorist reinforcement, cognitive information processing, and constructivist knowledge construction at the same time. In contrast, the development of machine learning tends to be more linear and accelerating, with each phase building upon the previous one to create increasingly sophisticated models.

What Differentiates LLMs: The Emergence of Human-Like Intelligence

Emergent abilities in large language models (LLMs) like GPT-3 or Claude represent a leap beyond simple pattern recognition. These abilities are evident when models generate coherent essays, translate languages, compose poetry, or engage in complex dialogue—tasks that go far beyond mere mimicry. Unlike traditional machine learning (ML) or deep learning (DL) models, which are trained for a single, well-defined task like image classification or sentiment analysis, LLMs are inherently task-agnostic, able to handle a wide range of tasks without being explicitly retrained for each one.

For instance, in conventional ML approaches, a model trained to recognize handwritten digits could not be used for natural language translation without significant retraining. Each specific application required a distinct model, carefully crafted for that particular use. LLMs, however, leverage their exposure to vast amounts of diverse data to generalize across different types of tasks. They have learned a kind of flexible "understanding" that allows them to perform many different roles—from answering trivia questions to generating creative fiction—without being explicitly trained for each one. This flexibility is a key aspect of emergent abilities (paper link): models do not just apply memorized patterns but adapt dynamically to new contexts, even those that were not part of their original training data. Consider a classroom scenario where a teacher uses project-based learning to teach problem-solving skills. Students are given an open-ended problem, like designing a sustainable city. This task requires them to apply knowledge from different subjects—geography, math, and social studies—much like how LLMs draw from diverse datasets to perform new tasks. The students must adapt their knowledge to solve novel problems, similar to how LLMs generate appropriate responses to queries they have never seen before.

Another crucial difference lies in the model's adaptability post-training. Traditional ML models, once trained, are static; their capabilities are fixed until they are retrained with new data. This retraining process can be computationally expensive and time-consuming. In contrast, LLMs exhibit a form of dynamic learning through interaction. By analyzing the context of the conversation and the responses from users, LLMs can effectively "self-correct" during ongoing interactions. For example, if an LLM misunderstands a user's request, it can adjust its response based on the feedback it receives, resulting in more accurate and context-aware answers over time. A similar concept can be seen in real-life learning environments. Imagine a language teacher correcting a student's spoken mistake during a conversation. The student listens, adjusts their understanding, and attempts to use the corrected form in future conversations. This dynamic correction and adaptation are akin to how LLMs refine their behavior based on user interactions, making both students and LLMs more adept at handling similar situations in the future.

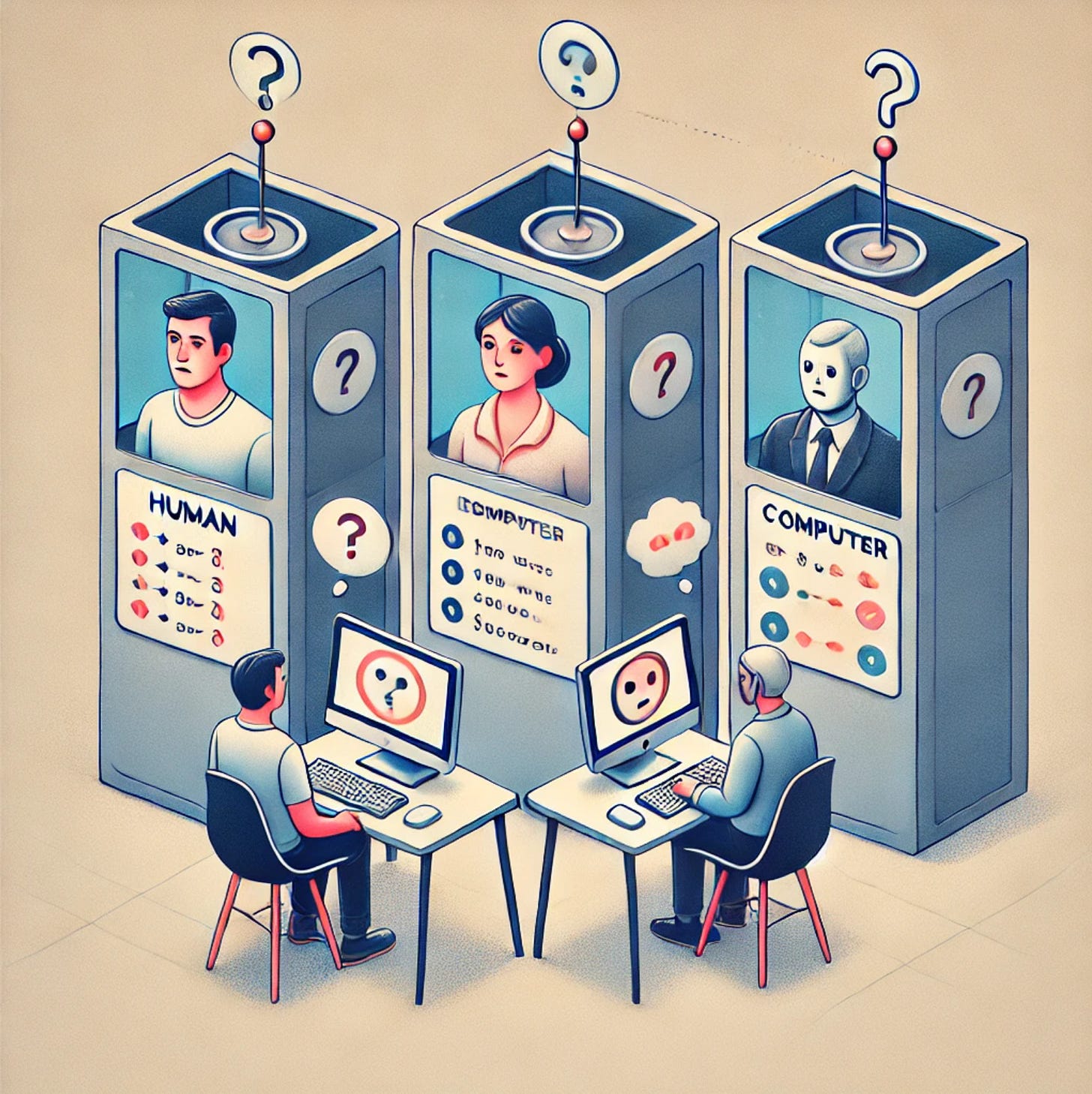

Emergent abilities also bring these models closer to what is often referred to as "passing the Turing Test"—a benchmark for assessing a machine's ability to exhibit intelligent behavior that is indistinguishable from that of a human. In the past, simple chatbots or early ML models could only manage scripted or rigidly defined interactions, making it easy for users to identify them as non-human. Modern LLMs, however, are much harder to differentiate from a human responder because of their emergent abilities to engage in fluid, context-aware, and nuanced conversation. They can answer follow-up questions, understand ambiguity, and even recognize shifts in conversation tone or intent—all hallmarks of emergent intelligence.

For product managers in education and EdTech, this shift represents a profound opportunity. The task-agnostic nature of LLMs means they can be integrated into learning platforms to provide diverse educational support—from answering specific student questions to helping generate personalized learning content—all within a single framework. Their ability to self-correct and adapt also means that LLMs can learn from student interactions, providing tailored responses that evolve based on user behavior, thus enhancing the learning experience over time. Unlike traditional educational AI systems that required constant manual updates and retraining, LLMs bring a new level of adaptability and scalability that can significantly reduce maintenance overhead while increasing the quality of interaction with learners.

In essence, emergent abilities mark a paradigm shift by enabling models to break free from the limitations of single-purpose training and fixed knowledge. Instead, LLMs have become general-purpose tools capable of nuanced understanding, fluid adaptability, and performing a diverse array of tasks—all of which hold enormous potential for reshaping how we think about AI in education.

How does LLM emerge with perceived intelligence?

Consider the difference between a parrot that repeats what it hears and a crow that uses tools to solve a problem. The parrot, much like early machine learning models, can imitate sounds without understanding their meaning. The crow, however, demonstrates reasoning and problem-solving skills—an intelligence that goes beyond simple imitation. For example, crows have been observed using pebbles as tools to raise the water level in a container to reach food or to break nuts for food, showcasing their ability to solve problems in innovative ways. Modern LLMs, like GPT-3 and beyond, are moving toward this kind of intelligence. They can draw on vast amounts of contextual knowledge to generate meaningful responses, demonstrating a form of reasoning that is more akin to human cognitive abilities. For instance, when asked to provide a summary of a complex scientific paper, GPT-3 can generate a coherent and informative overview, something that requires understanding the broader context and extracting relevant information.

Training an LLM with vast datasets resembles "crystallized intelligence" in humans—the accumulation of knowledge and facts. For example, an LLM trained on millions of Wikipedia articles develops a vast store of factual information. However, fluid intelligence—the ability to think abstractly and solve new problems—is something LLMs can only approximate through their internal algorithms. Unlike humans, who can adapt quickly to new information and situations, LLMs often require extensive retraining to adjust their understanding. For example, while a human can learn a new concept from a single example, an LLM may need thousands of new data points to update its knowledge base effectively.

Pretraining and Reinforcement Learning for LLM

The evolution of LLMs involves a two-stage learning process: pretraining and reinforcement learning. This process can be compared to a student going through different phases of their education:

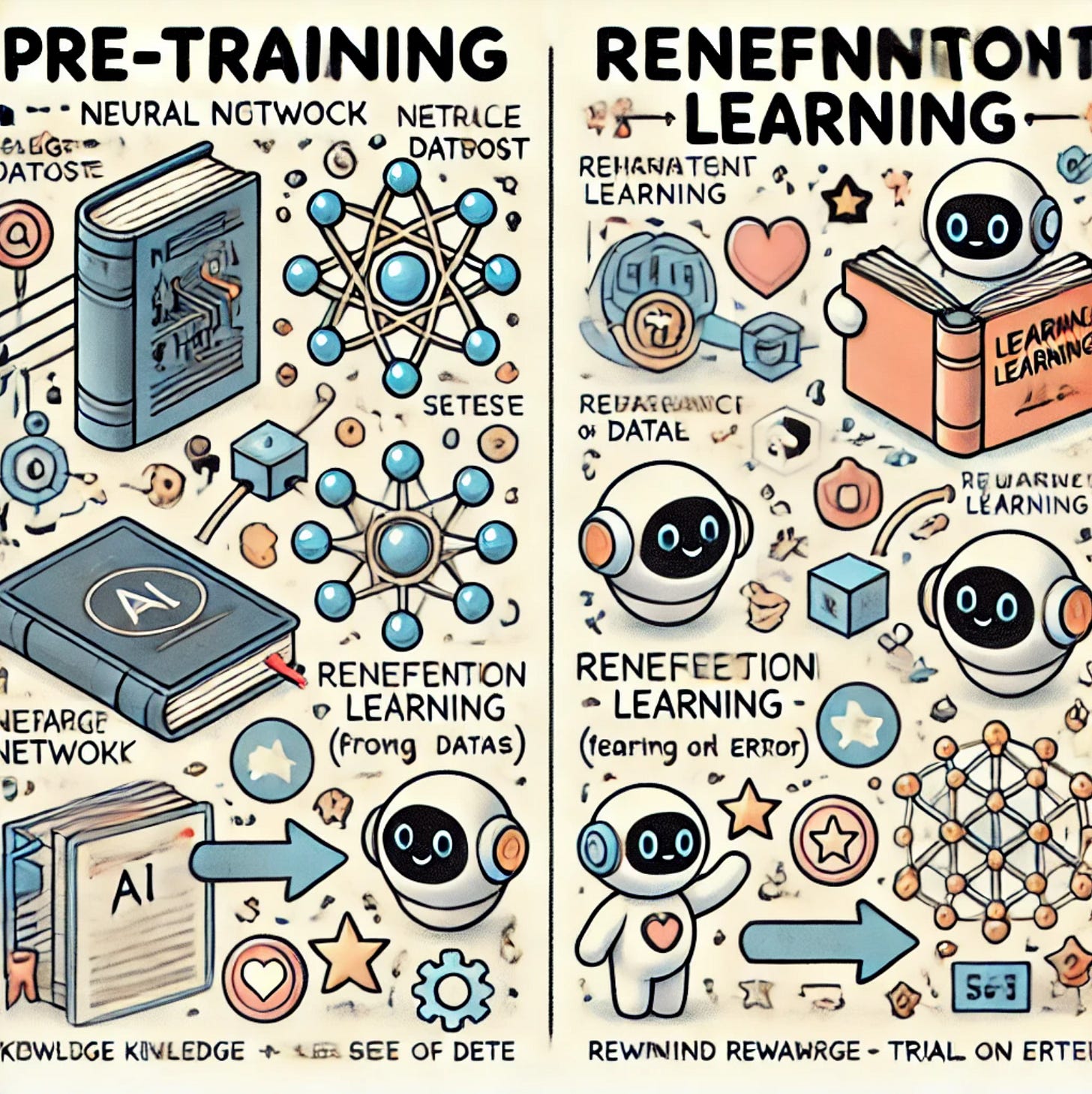

(Here's the illustration depicting pre-training and reinforcement learning. Generated by ChatGPT)

Pretraining is akin to early childhood and primary education—immersing the model in a broad range of subjects and contexts without a particular task in mind. During pretraining, the LLM learns patterns, language structures, and basic concepts by being exposed to an extensive dataset of web pages, books, and articles, similar to a student gaining foundational knowledge. For example, just as a child learns about the world by reading storybooks and listening to conversations, an LLM like GPT-3 learns about language, culture, and facts by processing billions of words from diverse sources. This stage can be compared to the "learning to read" phase in human education, where the emphasis is on acquiring fundamental skills that allow for independent learning.

Reinforcement Learning is similar to vocational training or specialized education, where learning is adjusted based on feedback and specific goals. In LLMs, this stage involves fine-tuning and Reinforcement Learning with Human Feedback (RLHF) to refine its abilities for specific tasks and improve its responses. This phase is akin to the "reading to learn" stage in human education, where students use their reading skills to explore new subjects, solve problems, and engage in higher-order thinking. For example, reinforcement learning is used to help models like ChatGPT become better conversationalists by incorporating feedback from human trainers who rate the quality of its responses.

The progression from GPT-3 to ChatGPT highlights this educational analogy. GPT-3 initially gained foundational capabilities through pretraining, such as language generation, world knowledge, and in-context learning. These abilities emerged from the large training corpus of 300 billion tokens, which included diverse sources like web data, books, and Wikipedia. The origins of in-context learning remain somewhat elusive, but it likely emerged from the sequential data used during training, much like how children learn patterns and associations by observing sequences of events in their environment.

Training on Code and Instruction Tuning introduced new abilities, highlighting that the content and types of training data matter significantly. For instance, training on code datasets allows models to learn structured problem-solving approaches, while instruction tuning helps them better understand and follow human directives, such as:

Instruction Following: Enabled by instruction tuning, allowing the model to generate contextually relevant responses. For example, when asked, "Explain the Pythagorean theorem in simple terms," ChatGPT can provide an age-appropriate explanation that breaks down complex concepts into digestible pieces.

Generalization to New Tasks: Achieved by scaling the diversity of instructions. For instance, when prompted to write a recipe, create a poem, or solve a logic puzzle, the model can adapt its response based on the nature of the task, similar to how a student learns to apply their math skills in physics or economics.

Complex Reasoning with Chain-of-Thought (CoT): Possibly a byproduct of training on large code datasets, as the structured nature of programming aligns well with task decomposition. For example, when asked to solve a multi-step math problem, ChatGPT can break down the solution into individual steps, providing a chain of reasoning that mirrors how a student might show their work on a math test.

This aligns with human cognitive development, where gaining structured knowledge and engaging in problem-solving activities leads to deeper understanding. The structured nature of programming languages helps LLMs acquire skills like logical reasoning and decomposition—similar to how human learners develop problem-solving skills through activities like learning algebra or programming.

Summary of Part 2

In Part 2, we explored the evolution of machine learning models, focusing on constructivism, emergent intelligence, and the rise of LLMs. We examined the progression from GPT-3 to ChatGPT, highlighting how pretraining and reinforcement learning serve as foundational and specialized phases of development. Instruction tuning and training on code have played key roles in expanding the capabilities of these models, moving AI closer to the complex, adaptive learning seen in human cognition. As LLMs continue to evolve, they are beginning to exhibit forms of emergent intelligence that push the boundaries of what machines can do, from following complex instructions to solving problems in creative ways.