Mind Meets Machine: Exploring the Intersection of Learning Theories and AI [Part 1 of 3]

A Trilogy to Uncover How Human Learning Shapes AI – and Vice Versa

This trilogy is designed to guide educators and curious minds through the journey of how machines learn, in ways that parallel human learning processes. In Part 1, we set the foundation by exploring fundamental learning theories—behaviorism and cognitivism—and how they relate to early machine learning models. Part 2 dives deeper into constructivism and emergent intelligence, showcasing the evolution of machine learning and the rise of large language models. Finally, Part 3 takes us beyond mimicry, examining self-correction, multiple intelligences, and the future of machine learning. Follow along to see how each part builds upon the last, shedding light on how AI strives to replicate, and perhaps enhance, human cognition.

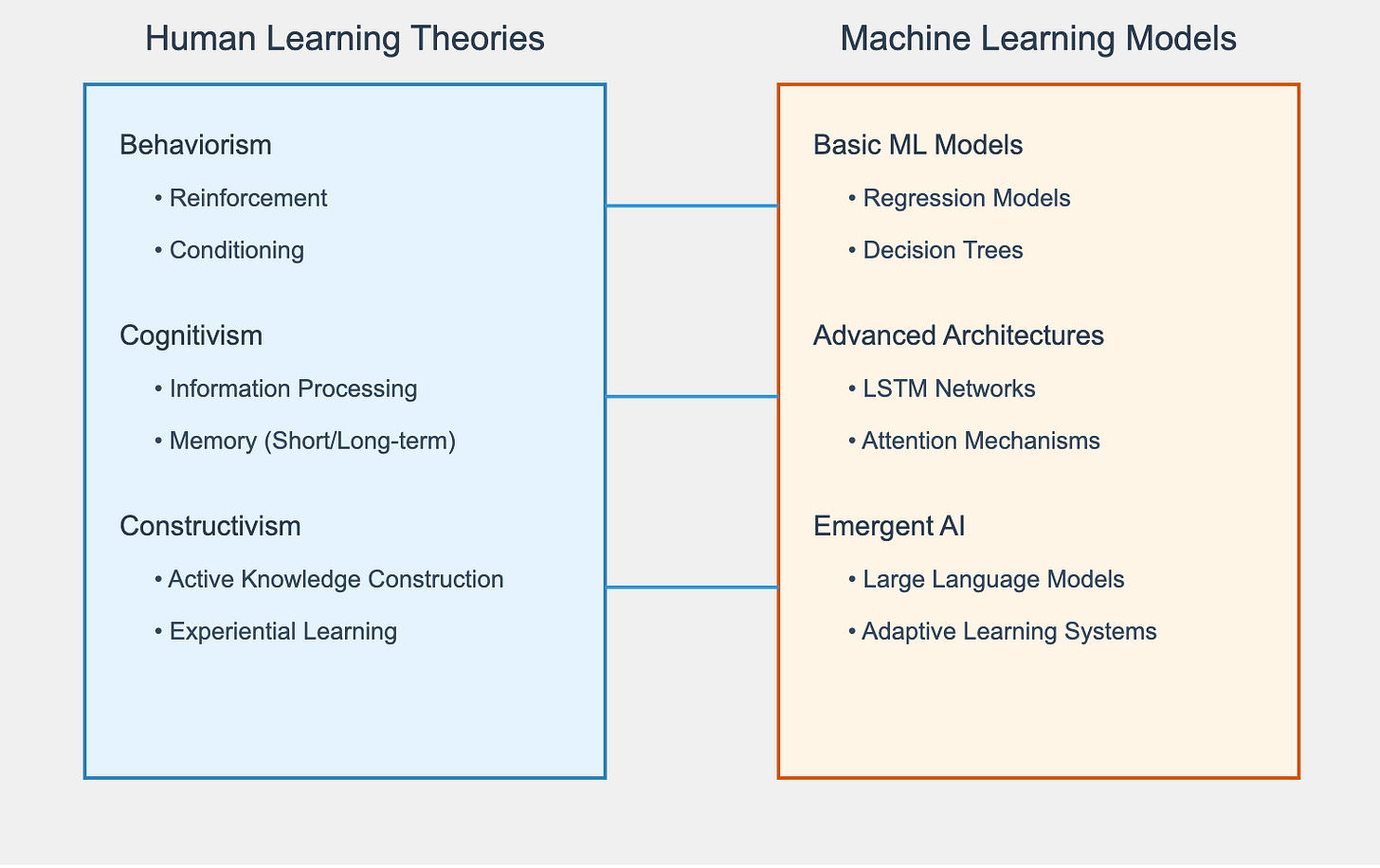

Summary of the comparison between human learning theories and machine learning models (I sent my trilogy draft to Claude artifact and asked for a visualization)

Who is this for?

In an era where artificial intelligence is becoming an integral part of our classrooms and daily lives, understanding how machines learn is no longer optional—it's crucial. The rapid rise of AI demands that educators make sense of it not just as a technology, but as a learner. How can we, as educators, harness this tool if we do not understand its processes? By drawing parallels between human learning theories and machine learning, we bridge the gap between abstract computation and tangible educational experiences. This connection holds the potential to transform not only how we use AI to enhance learning and teaching, but also how we perceive learning itself.

If you find these ideas intriguing, consider subscribing and sharing with others who might also be captivated by the convergence of education and technology.

About me: A Programmer's Perspective on Human Learning

From Software Engineering to Educational Innovation

During my journey as a software engineer over the past five years, while pursuing my EdD, I found it eye-opening to explore the educational field. With hands-on experience in software development and a deep dive into learning theories, I've had a unique opportunity to bridge two worlds: technology and education.

The learning theories were particularly refreshing: they show how academia extracts patterns from the human learning process and codifies them. Reflecting on common experiences, like learning a new programming language or following a recipe to make a new dish, brought new insights and helped me understand not just how I learn, but also how others do. By contrasting human learning theories with the evolution of machine learning (ML), we can uncover how artificial intelligence, such as large language models (LLMs), strives to mimic human cognition, sometimes quite successfully and sometimes less so.

Part 1: The Foundations of Learning - Behaviorism, Cognitivism, and the Birth of Machine Intelligence

Introduction: Connecting Human Learning Theories with Machine Learning Models

Learning is a fundamental process shared by both humans and machines, yet the ways in which they learn are markedly different. In education, three major learning theories—behaviorism, cognitivism, and constructivism—provide a foundation for understanding human learning processes. These theories explain how individuals acquire knowledge through reinforcement, information processing, and active engagement with their environment. Similarly, machine learning (ML) models, including neural networks, decision trees, and large language models (LLMs), have their own ways of 'learning' from data. By examining these connections, we can better understand how ML models like ChatGPT aim to replicate aspects of human cognition.

Learning Theories: How Humans Learn and How Machines Mimic Learning

Behaviorism: Conditioning Humans and Training Machines

Humans learn through a combination of experience, instruction, and reflection—all mediated by our biological and cognitive systems. One foundational learning theory that explains human learning is behaviorism. Behaviorism focuses on observable behaviors and how they are shaped by reinforcement or punishment. The classic example of conditioning still holds powerful lessons for understanding both human and machine learning. It suggests that learning occurs through repeated interactions with the environment, where positive outcomes reinforce behaviors and negative outcomes discourage them. This is directly related to assessments in education, where correct answers are rewarded, and incorrect ones are corrected through feedback, thus conditioning learners over time.

Remember Pavlov and his dogs? (generated by ChatGPT)

Machine learning models, on the other hand, go through a similar process called training, which mimics the learning and feedback loop of students. Just like students receive feedback to improve their understanding, ML models are trained iteratively, adjusting based on feedback (in this case, error reduction) to enhance performance. In the context of behaviorism, ML training can be seen as a form of reinforcement—adjusting model parameters to minimize errors, similar to how behavior is shaped by rewards and corrections. For instance, regression models or decision trees rely heavily on relevant data that must align closely with the task at hand. Just as behaviorism emphasizes conditioning, ML models require task-specific data to stay relevant—often necessitating a separate model for each distinct task. Who and where is the teacher in this case? The data itself, along with the final labels—annotations that indicate the correct answer or desired outcome—and the iterative process of refining the model, serve as the 'teacher,' constantly providing feedback and guiding adjustments.
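To make the feedback loop concrete, here is a minimal Python sketch of training by error reduction. It assumes a one-variable linear model fitted with plain gradient descent. The function names, the learning rate, and the tiny hours-versus-score dataset are illustrative assumptions, not taken from any specific library or from the tools mentioned in this article.

```python
def train_linear_model(examples, learning_rate=0.05, epochs=1000):
    """Fit y = w * x + b by repeatedly nudging w and b to shrink the error."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in examples:
            error = (w * x + b) - y      # feedback: how far off was the prediction?
            grad_w += 2 * error * x / n  # gradient of mean squared error w.r.t. w
            grad_b += 2 * error / n      # gradient of mean squared error w.r.t. b
        w -= learning_rate * grad_w      # adjust parameters against the error
        b -= learning_rate * grad_b
    return w, b


def mean_squared_error(examples, w, b):
    """Average squared gap between predictions and the labeled answers."""
    return sum(((w * x + b) - y) ** 2 for x, y in examples) / len(examples)


# Hypothetical labeled data (hours studied, quiz score) that follows y = 2x + 1.
labeled_examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = train_linear_model(labeled_examples)
print(f"learned w={w:.3f}, b={b:.3f}")
print(f"error before training: {mean_squared_error(labeled_examples, 0.0, 0.0):.3f}")
print(f"error after training:  {mean_squared_error(labeled_examples, w, b):.6f}")
```

Each pass over the labeled examples plays the role of a round of practice with corrections: the labels supply the right answers, and the update rule nudges the parameters toward them.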

Based on this analogy of machine learning and human learning, we can consider how AI might enhance learning and teaching in real educational settings. For instance, adaptive learning platforms use similar reinforcement principles to personalize the learning experience for students. By analyzing a student’s responses and providing timely feedback, these platforms can reinforce positive learning behaviors, much like a teacher tailoring their approach based on individual student performance. Tools like Khan Academy and Duolingo leverage these techniques, adjusting the difficulty level and type of problems presented based on how well the learner is performing, ensuring an optimal path for skill mastery.
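As a rough illustration of difficulty adjustment, the sketch below raises or lowers a difficulty level based on a learner's recent answers. This is a simplified assumption about how such a rule could look, not a description of how Khan Academy or Duolingo actually work. The function name, the thresholds (0.8 and 0.4), and the 1 to 5 level scale are all hypothetical.

```python
def next_difficulty(current_level, recent_results, min_level=1, max_level=5):
    """Pick the next difficulty level from a list of recent True/False answers.

    Consistent success moves the learner up, repeated errors move them down,
    and mixed results keep them at the same level for more practice.
    """
    if not recent_results:
        return current_level
    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= 0.8:   # assumed threshold for "ready for harder problems"
        return min(current_level + 1, max_level)
    if accuracy <= 0.4:   # assumed threshold for "needs easier problems"
        return max(current_level - 1, min_level)
    return current_level


# A learner at level 2 who answered the last five questions correctly moves up.
print(next_difficulty(2, [True, True, True, True, True]))
# A learner at level 2 who got only one of five right moves down.
print(next_difficulty(2, [True, False, False, False, False]))
```

The behaviorist flavor is in the loop itself: the learner's observable responses are the only input, and the system's reaction (harder or easier material) is the consequence.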

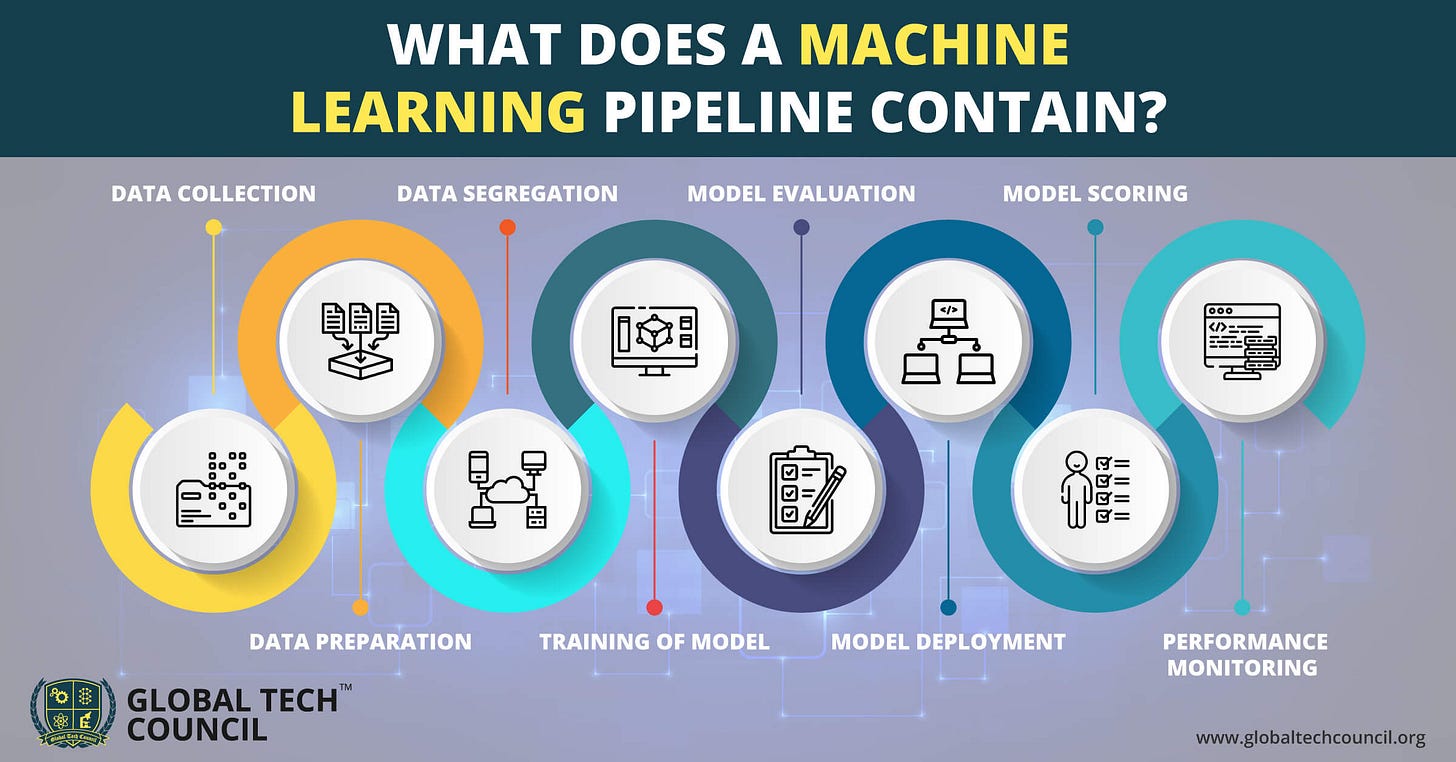

Training these models involves a structured pipeline: first, data collection, where relevant examples are gathered to train the model—similar to a teacher preparing examples for a lesson. Next comes data preprocessing, where the data is cleaned and formatted, much like a teacher organizing lesson materials for clarity. Then comes model training, where the model learns patterns through iterations that adjust its internal parameters based on error feedback, akin to a student receiving corrections after each practice exercise. Finally, the model goes through evaluation to ensure that it performs well on new, unseen data, similar to how a student takes a test to demonstrate mastery of a topic. This process mirrors how behavior is shaped through iterative reinforcement—successive adjustments lead to better alignment with the desired outcome, just as students gradually improve through practice and feedback in the classroom.

(Source: https://images.app.goo.gl/DWG48Xfwp41dNykn6)
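The four pipeline stages described above (data collection, preprocessing, training, evaluation) can be sketched end to end in a few lines of Python. This is a toy version under stated assumptions: the data is synthetic, the model is a one-variable linear fit, and every function name and constant here is made up for illustration.

```python
import random


def collect_data(n=200, seed=0):
    """Step 1, data collection: synthetic (x, y) pairs standing in for real examples."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(0, 10)
        y = 3.0 * x + 5.0 + rng.gauss(0, 1.0)  # assumed true pattern plus noise
        data.append((x, y))
    return data


def split(data, test_fraction=0.2, seed=1):
    """Hold back some examples so the final 'test' uses unseen data."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def preprocess(data, lo, hi):
    """Step 2, preprocessing: rescale inputs using the training set's range."""
    return [((x - lo) / (hi - lo), y) for x, y in data]


def train(train_set, learning_rate=0.1, epochs=2000):
    """Step 3, training: gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(train_set)
    for _ in range(epochs):
        grad_w = sum(2 * ((w * x + b) - y) * x for x, y in train_set) / n
        grad_b = sum(2 * ((w * x + b) - y) for x, y in train_set) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def evaluate(data_set, w, b):
    """Step 4, evaluation: mean squared error on the given examples."""
    return sum(((w * x + b) - y) ** 2 for x, y in data_set) / len(data_set)


raw = collect_data()
train_raw, test_raw = split(raw)
lo = min(x for x, _ in train_raw)
hi = max(x for x, _ in train_raw)
train_set = preprocess(train_raw, lo, hi)
test_set = preprocess(test_raw, lo, hi)
w, b = train(train_set)
test_error = evaluate(test_set, w, b)
print(f"Error on unseen examples: {test_error:.2f}")
```

Holding back a test set is the machine equivalent of giving students exam questions they have not practiced on: it checks whether the pattern was learned rather than memorized.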

Similarly, educators can utilize AI to automate parts of the learning and feedback process, providing instant evaluations and hints, much like a student receiving feedback from a tutor. This has been particularly useful in large-scale educational contexts, such as MOOCs (Massive Open Online Courses), where AI-driven assessment tools can help ensure that students receive individualized attention even in a class of thousands. For example, automated grading tools for written assignments can give real-time suggestions, allowing students to correct mistakes while the learning is still fresh.

However, despite their effectiveness, these models have significant limitations. Behaviorism-based approaches, like regression models and decision trees, can only capture explicit relationships within the data and require highly task-specific data to remain relevant. Imagine a student who has memorized all the steps to solve a specific math problem but struggles to apply those steps to a slightly different problem—that's similar to how these models operate. They lack the ability to generalize across varied contexts without extensive retraining, which restricts their adaptability. This limitation sets the stage for more sophisticated approaches, such as those based on cognitivism, which aim to mimic deeper, more flexible learning processes. In machine learning, this is reflected in the emergence of deep learning models, which attempt to go beyond simple associations and handle more abstract forms of learning and reasoning, akin to a student developing problem-solving skills that can be applied across various subjects.
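The generalization problem can be shown with a small, hedged example. Below, a straight line is fitted to a curved relationship using only a narrow range of inputs, then asked about inputs far outside that range. The target function, the input ranges, and the helper names are assumptions chosen to make the effect visible.

```python
def fit_line(points):
    """Ordinary least squares for a single input: returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in points)
    variance = sum((x - mean_x) ** 2 for x, _ in points)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def mse(points, slope, intercept):
    """Mean squared error of the fitted line on the given points."""
    return sum(((slope * x + intercept) - y) ** 2 for x, y in points) / len(points)


def target(x):
    """The hypothetical 'true' relationship, which is curved rather than linear."""
    return x * x


# The model only ever practices on inputs between 0 and 1...
familiar = [(i / 10, target(i / 10)) for i in range(0, 11)]
# ...and is then tested on a "slightly different problem": inputs between 3 and 4.
unfamiliar = [(i / 10, target(i / 10)) for i in range(30, 41)]

slope, intercept = fit_line(familiar)
error_familiar = mse(familiar, slope, intercept)
error_unfamiliar = mse(unfamiliar, slope, intercept)
print(f"error on familiar range:   {error_familiar:.4f}")
print(f"error on unfamiliar range: {error_unfamiliar:.1f}")
```

The line looks competent on the range it was trained on and fails badly outside it, which is the modeling counterpart of the student who memorized the steps for one specific problem.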

Given these limitations, AI tools can be enhanced with features inspired by more sophisticated human learning approaches. For instance, AI-based tutoring systems can move beyond rote memorization by incorporating deeper questioning and promoting critical thinking, helping students not just memorize facts, but apply their knowledge in different contexts. This approach mirrors educational strategies like Socratic questioning, where students are encouraged to think critically and make connections, leading to more robust understanding. An example of this can be found in tools like Squirrel AI, which utilizes adaptive learning pathways to help students build on fundamental concepts and apply them to more complex problems.

This is a video I usually share with my students on “How Machines Learn”, which is based on a behaviorist interpretation. It's fun to watch.

Cognitivism: Information Processing and Model Enhancements

Another major learning theory, cognitivism, emphasizes the role of internal processes in learning—how information is received, organized, stored, and retrieved by the mind. Cognitivism focuses on understanding the mechanisms behind knowledge acquisition, including the use of long-term and short-term memory, as well as managing cognitive load. This theory is reflected in machine learning models through concepts such as Long Short-Term Memory (LSTM) networks and Attention models.

Here is an illustration of cognitivism, showcasing how learning involves mental processes like memory, attention, and problem-solving.

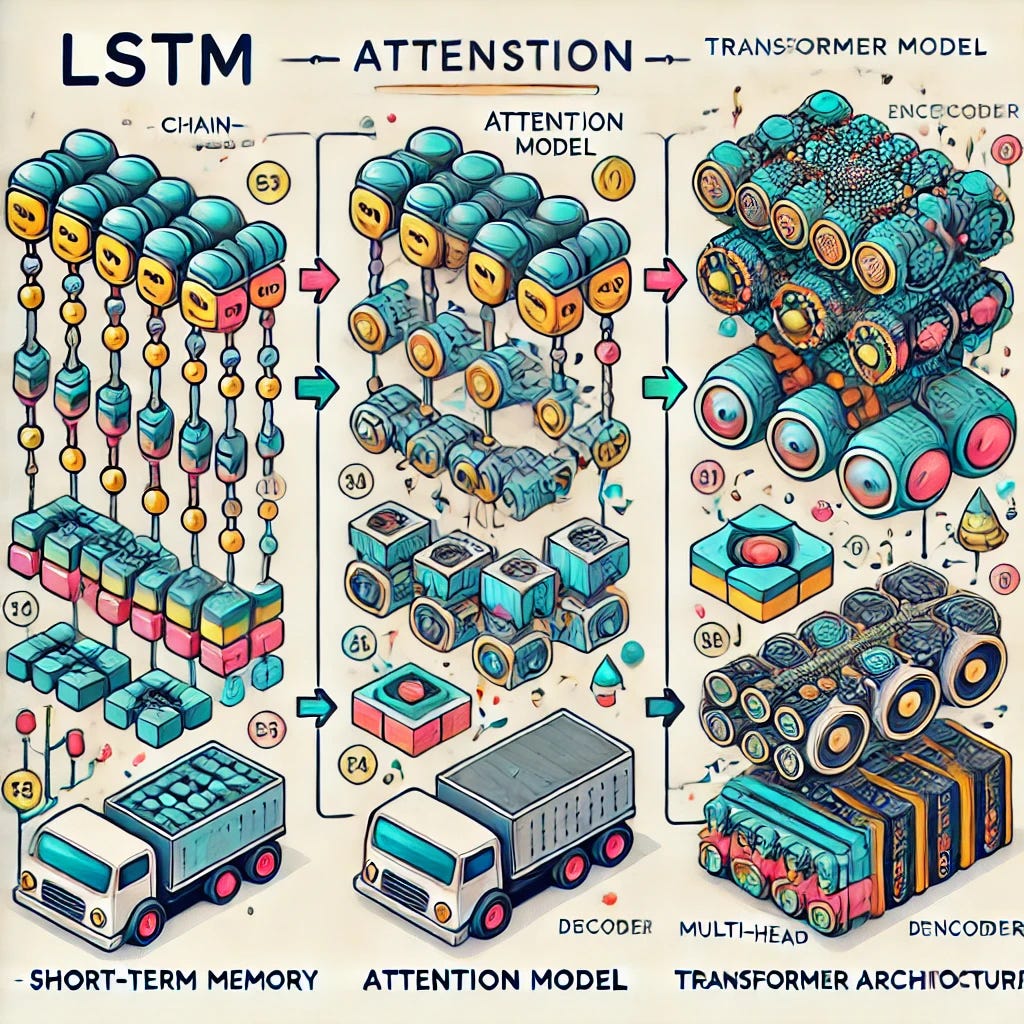

LSTM networks are designed to handle sequences of data and remember important information over time, akin to how humans use long-term memory to retain useful knowledge while engaging in new learning. LSTMs address a limitation of earlier recurrent neural networks, which tended to lose track of information from many steps back, by using gates that decide what to keep, what to update, and what to forget, much like how our minds keep track of relevant information over a longer duration. This capability allows LSTMs to understand context across time, whether in language, video, or other sequential tasks, enabling them to 'remember' previous information and make more informed decisions.
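For readers who want to see the memory idea in code, here is a minimal sketch of a single LSTM step using scalar values instead of vectors. It follows the standard LSTM gate equations, but the weights are hand-picked for illustration rather than learned, and the parameter names (`wf`, `uf`, `bf`, and so on) are my own labels.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step: returns the new hidden state h and cell state c."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new information to write
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value to write
    c = f * c_prev + i * g                                   # long-term memory (cell state)
    h = o * math.tanh(c)                                     # short-term output (hidden state)
    return h, c


def run_sequence(inputs, params, c_start):
    """Feed a sequence through the cell and return the final (h, c)."""
    h, c = 0.0, c_start
    for x in inputs:
        h, c = lstm_step(x, h, c, params)
    return h, c


# Hand-picked (not trained) parameters: forget gate near 1, input gate near 0,
# so the cell holds on to what it already knows and ignores new inputs.
remember_params = {
    "wf": 0.0, "uf": 0.0, "bf": 10.0,
    "wi": 0.0, "ui": 0.0, "bi": -10.0,
    "wo": 0.0, "uo": 0.0, "bo": 0.0,
    "wg": 1.0, "ug": 0.0, "bg": 0.0,
}
# Same cell, but with the forget gate near 0: old memory is wiped at every step.
forget_params = dict(remember_params, bf=-10.0)

inputs = [0.5, -0.3, 0.8]
h_keep, c_keep = run_sequence(inputs, remember_params, c_start=1.0)
h_drop, c_drop = run_sequence(inputs, forget_params, c_start=1.0)
print(f"memory with forget gate open:   {c_keep:.4f}")
print(f"memory with forget gate closed: {c_drop:.4f}")
```

In a trained network the gate values are not fixed like this; they are computed from the current input and the previous state, so the model learns when remembering is useful and when it is not.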

Attention models, such as those used in Transformer architectures, take the cognitivist concept further by managing and prioritizing information effectively. Attention mechanisms allow models to focus on the most relevant parts of the input data, similar to how humans manage cognitive load by filtering out distractions and concentrating on what is important. This selective focus improves learning efficiency and helps models make better sense of complex inputs, reflecting how our minds prioritize information for deeper processing and understanding.

This image illustrates the progression from LSTM to attention models and finally to transformer architecture. It visually highlights the key components and the evolution of these models in machine learning (generated by ChatGPT).
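The selective focus of attention can also be sketched in plain Python. The code below implements scaled dot-product attention, the core operation in Transformer architectures, for a single query. The toy vectors are made up for illustration, and real models use learned projections and matrix libraries rather than Python lists.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query.

    Each key is scored by its similarity to the query, the scores become
    weights, and the output is the weighted average of the values.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return output, weights


# Toy example: the query resembles the first key most and the third key least.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

output, weights = attention(query, keys, values)
print("attention weights:", [round(w, 3) for w in weights])
print("blended output:   ", [round(v, 3) for v in output])
```

The weights act like a budget of focus: because they must sum to 1, paying more attention to one part of the input means paying less to the others, which is a reasonable stand-in for managing cognitive load.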

Based on the analogy between machine learning and human cognitive processes, AI can significantly enhance learning and teaching by helping educators manage cognitive load in students. For example, AI-powered tools such as adaptive reading assistants can help highlight and summarize key information, reducing the burden on students as they process large volumes of text. Similarly, AI systems that use attention mechanisms can identify the areas where students are struggling and provide focused support, much like a tutor guiding a student to prioritize their learning.

In machine learning, enhancing models with mechanisms like LSTMs and Attention is akin to equipping learners with better tools for processing and recalling information. Just as effective educational strategies help students retain knowledge and reduce cognitive overload, these advancements in ML architectures enhance a model's ability to learn, understand, and generate meaningful outputs based on the data it processes. In a classroom setting, these capabilities can be integrated into personalized learning systems that adjust instructional content dynamically. For example, tools like Quizlet use AI to help students focus on specific topics that need reinforcement, just as an attention model might emphasize relevant data. These systems help students not only retain information but also develop strategies for managing their cognitive load more effectively, leading to a more efficient and personalized learning experience.

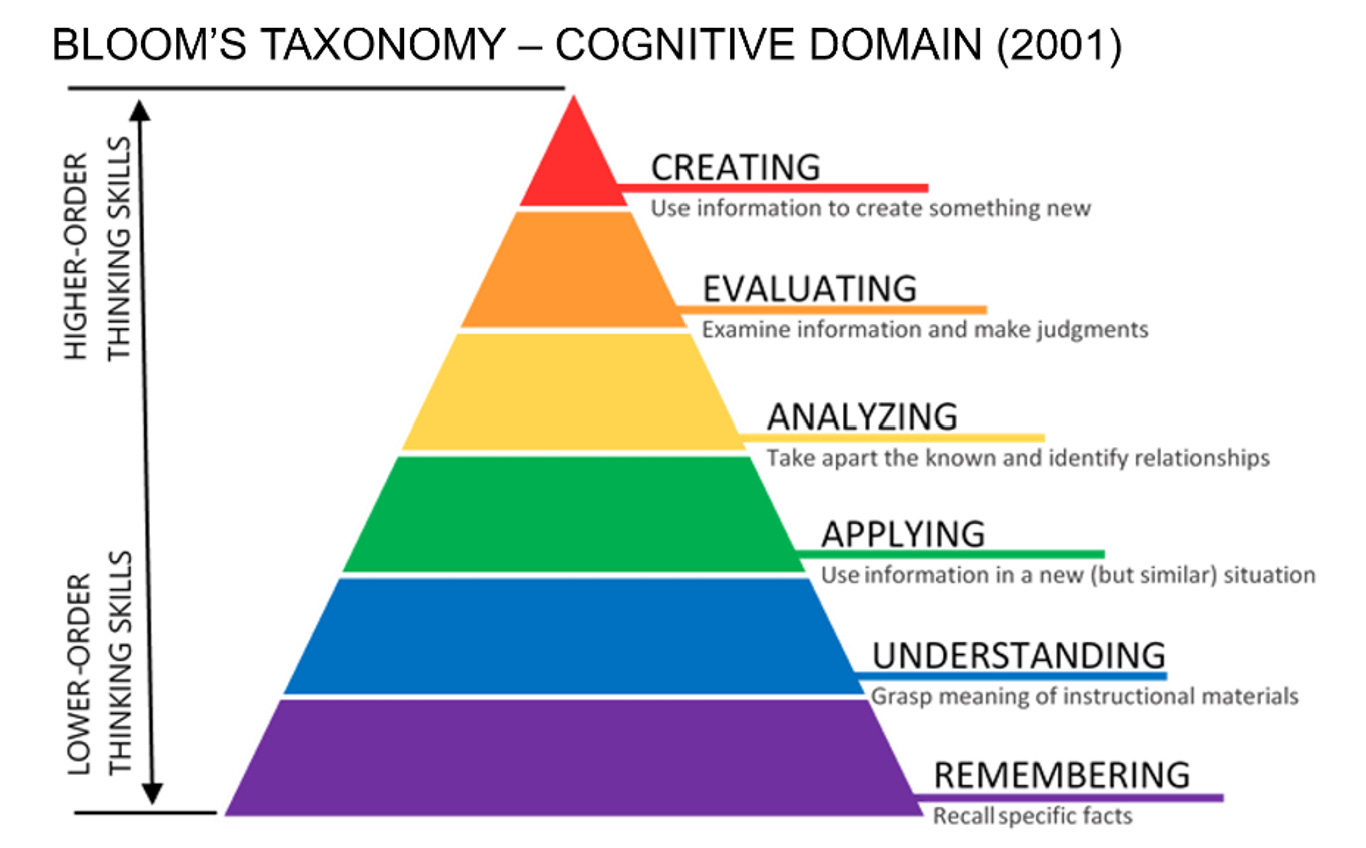

Higher-Order Processing: Moving Beyond Basics

Higher-order processing is a popular topic in the study of learning because it goes beyond simple memorization and repetition. It involves advanced skills such as critical thinking, problem-solving, and the ability to make connections between concepts. Understanding higher-order processing is crucial for educators and AI developers alike, as it helps bridge the gap between basic skill acquisition and the development of deeper, more meaningful understanding. For instance, imagine reading to learn versus learning to read. Initially, learning to read is about grasping the fundamentals of letters and sounds—essentially building the foundations for literacy. But reading to learn represents a higher-order process, where understanding goes beyond decoding words; it involves comprehension, inference, and critical thinking. This shift is not merely about gathering information but about processing it in ways that generate insights and facilitate problem-solving. It’s about connecting ideas, forming new interpretations, and building upon what’s already known—tacit, often subtle processes that make learning deeply meaningful.

(Source: Information Technology University of Florida)

In machine learning terms, this transition is like moving from processing raw text during pretraining to making nuanced inferences in specialized tasks during fine-tuning. Just as humans evolve from recognizing letters to analyzing themes and generating new thoughts, models evolve from identifying language patterns to producing coherent and insightful outputs. However, traditional machine learning and even many deep learning models struggle to achieve this level of complexity. They often lack the adaptability and depth of understanding that human learners bring to higher-order tasks, as they rely heavily on predefined structures and training data.

I built an AI note application to support and facilitate higher-order thinking and visualization. Please feel free to check it out.

The limitations of behaviorism and cognitivism, as well as traditional ML/DL models, become apparent when dealing with tasks that require deep understanding and adaptability. Behaviorism allows for basic conditioning and cognitivism provides internal structures for processing information, but both fall short in fostering emergent abilities—the active construction of knowledge and adaptability to novel situations—that human learners excel at. These limitations set the stage for the introduction of constructivism (in the upcoming Part 2, please subscribe if you want to learn more), a learning theory that emphasizes the importance of context, experience, and active engagement in learning. Constructivism moves beyond passive reception and internal processing; it focuses on how learners build their understanding through hands-on experience and dynamic interactions. This perspective aligns with modern educational practices that emphasize experiential learning, problem-solving, and the ability to adapt knowledge across different contexts.

In the same way, advanced AI systems are now evolving to incorporate elements of constructivism—attempting to simulate emergent abilities and dynamic learning. This is where emergent intelligence in large language models comes into play, moving AI closer to a form of learning that more accurately mirrors the rich and adaptive processes seen in human cognition.

Summary of Part 1

In Part 1, we explored the foundational learning theories of behaviorism and cognitivism, and how they relate to machine learning processes. Behaviorism provided a lens through which we understood the training of ML models as a form of reinforcement, similar to how humans learn through rewards and corrections. Cognitivism helped us see the role of internal processes, such as memory and information processing, in both human learning and ML advancements like LSTMs and Attention models. These parallels illustrate how machine learning is not just about raw data processing, but also about enhancing models with cognitive tools that make them more capable of understanding and generating meaningful insights.